Generative AI has taken the world by storm, and for good reason. This groundbreaking technology can tackle a wide range of tasks, from writing marketing copy to creating and editing code. LangChain is one of the most influential open-source frameworks for generative AI applications. But what if it doesn't meet your needs? It may have too steep a learning curve for your team, or it may lack the functionality you want. Either way, there are plenty of alternatives to LangChain. In this article, we'll identify the top LangChain alternatives to help you enhance your product's generative AI capabilities. By the end, you'll know how to choose and implement an option that meets your needs, and you'll have a clearer picture of how to build AI applications.

One valuable resource for achieving your objectives is Lamatic's managed generative AI tech stack. It features a no-code interface that makes it easy to understand LangChain and its alternatives, plus a wealth of templates and examples to help you hit the ground running with your chosen LangChain alternative.

What Is LangChain and What Are Its Key Capabilities?

LangChain is a powerful framework for creating applications powered by large language models (LLMs). Its open-source architecture offers developers a centralized interface for connecting, building, and integrating applications with various LLMs. The framework’s primary purpose is to simplify the development of LLM-driven applications like chatbots and virtual agents.

To this end, LangChain provides a versatile toolset as Python and JavaScript libraries, and it streamlines the creation of LLM applications through user-friendly abstractions.

What Are LangChain’s Key Features?

LangChain offers developers a modular approach to building LLM applications. It lets you compare prompts dynamically and switch between foundation models with minimal code changes, so you can experiment with different configurations without extensive rewrites. This flexibility helps developers discover the best setup for their use cases.
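To illustrate the modular idea, here is a minimal, framework-free Python sketch. It does not use LangChain's actual API. The `Chain` class and the two stub model functions are hypothetical stand-ins, meant only to show that swapping the model leaves the template and parser untouched.

```python
from dataclasses import dataclass
from typing import Callable

# A "model" here is any function that maps a prompt string to a completion.
# Both models below are hypothetical stubs standing in for real LLM clients.
Model = Callable[[str], str]


def echo_model(prompt: str) -> str:
    """Stub model: returns the prompt in upper case."""
    return prompt.upper()


def reverse_model(prompt: str) -> str:
    """Stub model: returns the prompt reversed."""
    return prompt[::-1]


@dataclass
class Chain:
    """A minimal template -> model -> parser pipeline (hypothetical, not LangChain's API)."""
    template: str
    model: Model
    parser: Callable[[str], str] = str.strip

    def run(self, **variables: str) -> str:
        prompt = self.template.format(**variables)  # fill in the prompt template
        raw = self.model(prompt)                    # call whichever model is plugged in
        return self.parser(raw)                     # post-process the raw output


if __name__ == "__main__":
    for model in (echo_model, reverse_model):
        # Only the `model` argument changes; the template and parser stay the same.
        chain = Chain(template="Summarize: {text}", model=model)
        print(model.__name__, "->", chain.run(text="hello"))
```

Keeping the model behind a plain callable is the design choice that makes the swap a one-argument change.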

In addition to its modular structure, LangChain's user-friendly tools simplify building LLM applications. They make it easier to create chatbots and virtual assistants, integrate them with external data sources, and connect them to existing software workflows.

Why Would You Want a LangChain Alternative?

LangChain launched as an open-source project in 2022 and quickly grew into a startup, and many tools have since emerged as alternatives. Despite some overlap, these alternatives were built for slightly different purposes, so they may better suit specific project requirements or complement LangChain. And although LangChain strives for convenience, it ironically poses a series of challenges.

The Complexity of LangChain

LangChain's many layers of abstraction have drawn criticism for adding unnecessary complexity. Critics argue that instead of simplifying the path to LLMs, LangChain compounds the complexity it aims to reduce. Several observers note that its intricate approach distracts newcomers from working directly with the underlying models, leaving the framework to act as a confusing intermediary.

35 Best LangChain Alternatives

1. Lamatic - Managed Generative AI Tech Stack

Lamatic offers a fully managed generative AI tech stack. Features include:

- Managed GenAI Middleware

- Custom GenAI API (GraphQL)

- Low-Code Agent Builder

- Automated GenAI Workflow (CI/CD)

- GenOps (DevOps for GenAI)

- Edge Deployment via Cloudflare Workers

- Integrated Vector Database (Weaviate)

Lamatic empowers teams to rapidly implement GenAI solutions without accruing tech debt. Our platform automates workflows and ensures production-grade deployment on the edge, enabling fast, efficient GenAI integration for products needing swift AI capabilities.

Lamatic is a comprehensive managed platform that combines a low-code visual builder, Weaviate, and integrations with various apps and models. It enables users to build, test, and deploy high-performance GenAI applications on the edge in minutes.

Key offerings of Lamatic.ai include:

- Intuitive Managed Backend: Facilitates drag-and-drop connectivity to your preferred models, data sources, tools, and applications

- Collaborative Development Environment: Enables teams to collaboratively build, test, and refine sophisticated GenAI workflows in a unified space

- Seamless Prototype to Production Pipeline: Features Weaviate and a self-documenting GraphQL API that automatically scales on serverless edge infrastructure

Start building GenAI apps for free today with our managed generative AI tech stack.

2. n8n

n8n is a powerful source-available low-code platform that combines AI capabilities with traditional workflow automation. This approach allows users with varying levels of expertise to build custom AI applications and integrate them into business workflows. As one of the leading LangChain alternatives, n8n offers an intuitive drag-and-drop interface for building AI-powered tools like:

- Chatbots

- Automated processes

It balances ease of use and functionality, allowing for low-code development while enabling advanced customization. Key features include:

- LangChain Integration: Use LangChain's powerful modules in a user-friendly environment with additional features.

- Flexible Deployment: Choose between cloud-hosted or self-hosted solutions to meet security and compliance requirements.

- Advanced AI Components: Implement chatbots, personalized assistants, document summarization, and more using pre-built AI nodes.

- Custom Code Support: Add custom JavaScript / Python code when needed.

- LangChain Vector Store Compatibility: Integrate with various vector databases for efficient storage and retrieval of embeddings.

- Memory Management: Implement context-aware AI applications with built-in memory options for ongoing conversations

- RAG (Retrieval-Augmented Generation) Support: Enhance AI responses with relevant information from custom data sources.

- Scalable Architecture: Handles enterprise-level workloads with a robust, scalable infrastructure.

One of n8n's distinguishing features is its extensive library of pre-built connectors. This vast ecosystem of integrations allows users to incorporate AI capabilities into existing business processes—from customer support to data analytics and beyond.

3. Flowise

Flowise is an open-source, low-code platform for creating customized LLM applications. It offers a drag-and-drop user interface and integrates with popular frameworks like:

- LangChain

- LlamaIndex

Nevertheless, users should remember that while Flowise simplifies many aspects of AI development, it can still prove difficult to master for those unfamiliar with the concepts of LangChain or LLM applications.

In addition, for highly specialized or performance-critical applications, developers may turn to the code-first approaches that other LangChain alternatives offer. Key features include:

- Integration with popular AI frameworks such as LangChain and LlamaIndex

- Support for multi-agent systems and RAG

- Extensive library of pre-built nodes and integrations

- Tools to analyze and troubleshoot chatflows and agentflows (these are two types of apps you can build with Flowise).

4. Langflow

Langflow is an open-source visual framework for building multi-agent and RAG applications. Langflow smoothly integrates with the LangChain ecosystem, generating Python and LangChain code for production deployment. This feature bridges the gap between visual development and code-based implementation, giving developers the best of both worlds.

Langflow also excels in providing LangChain tools and components. These pre-built elements allow developers to quickly add functionality to their AI applications without coding from scratch.

Key features include:

- Drag-and-drop interface for building AI workflows.

- Integration with various LLMs, APIs, and data sources.

- Python and LangChain code generation for deployment.

5. LlamaIndex

LlamaIndex is a powerful data framework designed for building LLM applications. It provides a set of tools for:

- Data ingestion

- Indexing and querying

This makes it an excellent choice for developers looking to create context-augmented AI applications. Key features include:

- Extensive data connectors for various sources and formats

- Advanced vector store capabilities with support for 40+ vector stores

- Powerful querying interface, including RAG implementations.

- Flexible indexing capabilities for various use cases.
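LlamaIndex's ingest, index, and query flow can be illustrated with a framework-free sketch. This is not LlamaIndex's API. `TinyIndex`, `embed`, and `build_rag_prompt` are hypothetical names, and a bag-of-words counter stands in for a real embedding model and vector store.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-frequency vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyIndex:
    """Hypothetical in-memory vector index: ingest documents, then query by similarity."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter]] = []

    def ingest(self, text: str) -> None:
        self.docs.append((text, embed(text)))  # store the text alongside its vector

    def query(self, question: str, k: int = 1) -> list[str]:
        q = embed(question)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_rag_prompt(index: TinyIndex, question: str, k: int = 1) -> str:
    """Augment the user question with retrieved context before sending it to an LLM."""
    context = "\n".join(index.query(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    index = TinyIndex()
    index.ingest("The refund window is 30 days from delivery.")
    index.ingest("Support is available Monday to Friday.")
    print(build_rag_prompt(index, "How many days is the refund window?"))
```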

6. txtai

txtai is an all-in-one embedding database that offers a comprehensive solution for:

- Semantic search

- LLM orchestration

- Language model workflows

It combines vector indexes, graph networks and relational databases to enable advanced features like:

- Vector search with SQL

- Topic modeling and RAG

txtai can function independently or as a knowledge source for LLM prompts. Its support for both Python and YAML-based configuration makes it flexible and accessible to developers with different preferences and skill levels.

The framework also offers API bindings for JavaScript, Java, Rust and Go, extending its use across different tech stacks. Key features include:

- Vector search with SQL integration.

- Multimodal indexing for text, audio, images and video.

- Language model pipelines for various NLP tasks.

- Workflow orchestration for complex AI processes.

7. Haystack

Haystack is a versatile open-source framework for building production-ready LLM applications, including:

- Chatbots

- Intelligent search solutions

- RAG applications

Its extensive documentation, tutorials, and active community support make it an attractive option for junior and experienced LLM developers. Key features include:

- Modular architecture with customizable components and pipelines

- Support for multiple model providers such as Hugging Face, OpenAI, Cohere

- Integration with various document stores and vector databases

- Advanced retrieval techniques, such as Hypothetical Document Embeddings (HyDE), can significantly improve the quality of the context retrieved for LLM prompts.
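HyDE is simple enough to sketch without any framework. An LLM first writes a hypothetical answer, and that answer (not the raw question) is embedded to search the document store. This is not Haystack's API. `hyde_retrieve` and `fake_llm` are hypothetical, and bag-of-words vectors stand in for real embeddings.

```python
import math
import re
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    """Toy bag-of-words vector standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def hyde_retrieve(question: str, docs: list[str], generate: Callable[[str], str], k: int = 1) -> list[str]:
    """HyDE: search with the embedding of an LLM-written hypothetical answer."""
    hypothetical = generate(f"Write a short passage that answers: {question}")
    target = embed(hypothetical)  # embed the fake answer, not the question
    return sorted(docs, key=lambda d: cosine(target, embed(d)), reverse=True)[:k]


def fake_llm(prompt: str) -> str:
    """Hypothetical stub LLM; a real system would call a model here."""
    return "Paris is the capital city of France."


if __name__ == "__main__":
    docs = [
        "Paris is the capital and largest city of France.",
        "What is the capital of Germany? Berlin.",
    ]
    print(hyde_retrieve("Which city is France's seat of government?", docs, fake_llm))
```

The intuition is that a plausible answer tends to share more vocabulary (or embedding space) with real answer passages than a short question does.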

8. CrewAI

CrewAI is a framework for orchestrating role-playing autonomous AI agents. CrewAI stands out for its ability to create a "crew" of AI agents, each with specific roles, goals, and backstories. For instance, you can have a researcher agent gathering information, a writer agent crafting content and an editor agent refining the final output – all working in concert within the same framework.

Key features include:

- Multi-agent orchestration with defined roles and goals

- Flexible task management with sequential and hierarchical processes

- Integration with various LLMs and third-party tools

- Advanced memory and caching capabilities for context-aware interactions.
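The sequential process described above can be sketched in plain Python. This is not CrewAI's API. The `Agent` dataclass and `run_sequential` are hypothetical, and lambdas stand in for the LLM call each role would make with its role and goal.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """A role-playing agent: a role, a goal, and a function standing in for an LLM call."""
    role: str
    goal: str
    act: Callable[[str], str]


def run_sequential(agents: list[Agent], task: str) -> list[tuple[str, str]]:
    """Sequential process: each agent's output becomes the next agent's input.

    Returns a transcript of (role, output) pairs; the last entry is the final result.
    """
    transcript: list[tuple[str, str]] = []
    result = task
    for agent in agents:
        result = agent.act(result)
        transcript.append((agent.role, result))
    return transcript


if __name__ == "__main__":
    # Hypothetical stub behaviours; a real crew would prompt an LLM with each role and goal.
    crew = [
        Agent("researcher", "gather facts", lambda t: f"notes on {t}"),
        Agent("writer", "draft content", lambda notes: f"draft based on {notes}"),
        Agent("editor", "polish the draft", lambda draft: draft.capitalize() + "."),
    ]
    for role, output in run_sequential(crew, "vector databases"):
        print(f"{role}: {output}")
```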

9. SuperAGI

SuperAGI is a powerful open-source alternative to LangChain for building, managing, and running autonomous AI agents at scale. Unlike frameworks that focus solely on local development or simple chatbots, SuperAGI provides comprehensive tools and features for creating production-ready AI agents.

One of SuperAGI's strengths is its extensive toolkit system, which is reminiscent of LangChain's tools but with a more production-oriented approach. These toolkits allow agents to interact with external systems and third-party services, making it easy to create agents that can perform complex real-world tasks.

Key features include:

- Autonomous Agent Provisioning: Easily build and deploy scalable AI agents

- Extensible Toolkit System: Enhance agent capabilities with various integrations that are similar to LangChain tools

- Performance Telemetry: Monitor and optimize agent performance in real-time

- Multi-Vector DB Support: Connect to different vector databases to improve agent knowledge.

10. Autogen

AutoGen is a Microsoft framework for building and orchestrating AI agents that solve complex tasks. When comparing AutoGen to LangChain, note that while both frameworks aim to simplify the development of LLM-powered applications, they take different approaches and have different strengths.

LangChain focuses on chaining together components for language model applications, while AutoGen emphasizes multi-agent interactions and conversations. AutoGen is the better fit if you need autonomous AI agents that can execute tasks and generate content independently, with minimal human intervention.

Key features include:

- Multi-agent conversation framework.

- Customizable and conversable agents.

- Enhanced LLM inference with caching and error handling

- Diverse conversation patterns for complex workflows.

11. Langroid

Langroid is an intuitive, lightweight, and extensible Python framework for building LLM-powered applications, with a focus on simplifying the developer experience. Langroid uses a multi-agent paradigm inspired by the Actor framework, allowing developers to:

- Set up agents

- Equip them with optional components (LLM, vector store, and tools/functions)

- Assign them tasks and have them solve problems collaboratively through message exchange

Langroid does not use LangChain, so developers coming from LangChain may need some time to adjust. This independence, however, allows Langroid to implement optimized approaches to common LLM application challenges.

Key features include:

- Multi-Agent Paradigm: Inspired by the Actor framework, enables collaborative problem-solving

- Intuitive API: Simplified developer experience for quick setup and deployment

- Extensibility: Easy integration of custom components and tools

- Production-Ready: Designed for scalable and efficient real-world applications.

12. Rivet

Rivet stands out among LangChain alternatives for production environments by combining visual programming with code integration. This open-source tool provides a desktop application for creating complex AI agents and prompt chains. While tools like Flowise and Langflow focus primarily on visual development, Rivet bridges the two: its visual editor can significantly speed up agent development, and its TypeScript library lets you execute the visually created graphs inside your existing applications.

Key features include:

- Unique combination of a node-based visual editor for AI agent development with a TypeScript library for real-time execution

- Support for multiple LLM providers such as OpenAI, Anthropic, AssemblyAI

- Live and remote debugging capabilities, which allow developers to monitor and troubleshoot AI agents in real time, even when deployed on remote servers.

13. Semantic Kernel

Semantic Kernel is a LangChain alternative developed by Microsoft to integrate LLMs into applications. It stands out for its multi-language support, offering C#, Python, and Java implementations. This makes Semantic Kernel attractive to a wider range of developers, especially those working on existing enterprise systems written in C# or Java.

Semantic Kernel's Advanced Planning Capabilities

Another key strength of Semantic Kernel is its built-in planning capabilities. While LangChain offers similar functionality through its agents and chains, Semantic Kernel planners are designed to work with its plugin system, allowing for more complex and dynamic task orchestration.

Key features include:

- Plugin system for extending AI capabilities.

- Built-in planners for complex task orchestration.

- Flexible memory and embedding support.

- Enterprise-ready with security and observability features.

14. Hugging Face Transformers Agent

The Hugging Face Transformers library has introduced an experimental agent system for building AI-powered applications. Transformers Agents offer a promising alternative, especially for developers already familiar with the Hugging Face ecosystem. However, their experimental nature and complexity may make them less suitable for junior developers or rapid prototyping than more established frameworks like LangChain.

Key features include:

- Support for both open-source (HfAgent) and proprietary (OpenAiAgent) models

- Extensive default toolbox, including document question answering, image question answering, speech-to-text, text-to-speech, translation, and more

- Customizable Tools: Users can create and add custom tools to extend the agent's capabilities

- Smooth Integration with Hugging Face: Access to its vast collection of models and datasets

15. Outlines

Outlines is a framework focused on generating structured text. While LangChain provides a comprehensive set of tools for building LLM applications, Outlines aims to make LLM outputs more predictable and structured, following JSON schemas or Pydantic models. This can be particularly useful in scenarios where precise control over the format of the generated text is required.

Key features include:

- Multiple model integrations with OpenAI, transformers, llama.cpp, exllama2, Mamba.

- Powerful prompting primitives based on Jinja templating engine.

- Structured generation (multiple choices, type constraints, regex, JSON, grammar-based)

- Fast and efficient generation with caching and batch inference capabilities.
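Multiple-choice structured generation relies on constrained decoding. At each step, tokens that cannot lead to a valid output are masked out before the next token is chosen. The sketch below illustrates that idea with a character-level toy scorer. It is not Outlines' API or implementation, and `constrained_choice` and `toy_scorer` are hypothetical.

```python
from typing import Callable

# A toy "language model": given the text so far, return scores for candidate next tokens.
# Tokens here are single characters; real models score subword tokens from a vocabulary.
Scorer = Callable[[str], dict[str, float]]


def constrained_choice(choices: list[str], score: Scorer) -> str:
    """Greedy decoding restricted so the output is always a prefix of one of `choices`.

    Simplification: decoding stops as soon as the output equals any complete choice.
    """
    out = ""
    while out not in choices:
        # Allowed next tokens: those that keep `out` a prefix of at least one choice.
        allowed = {c[len(out)] for c in choices if c.startswith(out) and len(c) > len(out)}
        scores = score(out)
        # Mask: tokens outside `allowed` are never considered; unscored allowed ones get -inf.
        # Sorting makes tie-breaking deterministic.
        out += max(sorted(allowed), key=lambda tok: scores.get(tok, float("-inf")))
    return out


def toy_scorer(prefix: str) -> dict[str, float]:
    """Hypothetical model that 'wants' to write the off-schema answer 'neat!'."""
    target = "neat!"
    return {target[len(prefix)]: 1.0} if len(prefix) < len(target) else {}


if __name__ == "__main__":
    print(constrained_choice(["positive", "negative", "neutral"], toy_scorer))
```

Left unconstrained, the toy model would emit "neat!"; with the mask applied, its output is forced onto one of the allowed labels.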

16. Claude Engineer

Claude Engineer is a LangChain alternative for Anthropic users that brings the capabilities of Claude 3 and 3.5 models directly to your command line. It provides a smooth experience for developers who prefer to work in a terminal. While it does not offer the visual workflow-building capabilities of low-code platforms like n8n or Flowise, its command-line interface suits developers who prefer a more direct, code-centric approach to AI-assisted development.

Key features include:

- Interactive chat interface with Claude 3 and Claude 3.5 models

- Extendable set of tools, including file system operations, web search, image analysis, and execution of Python code in isolated virtual environments

- Advanced auto-mode for autonomous task completion.

17. Auto-GPT

Beyond deploying AI agents, Auto-GPT's main goal is to turn GPT-4 into a fully self-reliant conversational AI. LangChain, in contrast, is a toolkit that connects various LLMs and utility packages, facilitating the creation of tailor-made applications.

Auto-GPT: A Powerful Tool with Room for Improvement

Unlike LangChain, Auto-GPT focuses on executing code and commands to deliver precise, goal-driven solutions presented in a comprehensible way. Despite its impressive capabilities, Auto-GPT in its current state tends to get stuck in repetitive logic loops and to struggle with intricate scenarios.

18. Priompt

Priompt (priority + prompt) is an open-source, JSX-based prompting library that bills itself as a prompt design library inspired by web frameworks like React. Priompt's philosophy is that, just as web design adapts content to different screen sizes, prompting should adapt its content to different context window sizes.

Priompt uses absolute and relative priorities to determine what to include in the context window. It composes prompts using JSX, treating them as components that are created and rendered just like React.

Developers familiar with React or similar libraries might find the JSX-based approach intuitive and easy to adopt. Priompt’s actively maintained source code is on GitHub.
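The priority idea can be sketched in Python. Priompt itself is a TypeScript/JSX library, so this is not its API. Each piece of the prompt carries a priority, and the renderer keeps every piece at or above the lowest priority cutoff that still fits the budget, preserving the original order. `Piece`, `render`, and the word-count tokenizer are hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Piece:
    text: str
    priority: int  # higher = more important


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def render(pieces: list[Piece], budget: int) -> str:
    """Keep all pieces with priority >= the lowest cutoff that still fits `budget`.

    Pieces keep their original order, like components laid out on a page.
    """
    best: list[Piece] = []
    for cutoff in sorted({p.priority for p in pieces}, reverse=True):
        kept = [p for p in pieces if p.priority >= cutoff]
        if sum(count_tokens(p.text) for p in kept) > budget:
            break  # lowering the cutoff further would only add more tokens
        best = kept
    return "\n".join(p.text for p in best)


if __name__ == "__main__":
    prompt = [
        Piece("You are a helpful assistant.", 100),
        Piece("Old message: hi there", 10),
        Piece("Recent message: what is RAG?", 50),
        Piece("User question: explain vector search", 90),
    ]
    print(render(prompt, budget=15))  # the low-priority old message is dropped
```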

19. Humanloop

Humanloop is a low-code tool that helps developers and product teams create LLM apps using models like GPT-4. It focuses on improving AI development workflows by helping you design effective prompts and evaluate how well the AI performs on your tasks.

Humanloop offers an interactive editor environment and playground, allowing technical and non-technical roles to work together to iterate on prompts. You use the editor for development workflows, including:

- Experimenting with new prompts and retrieval pipelines

- Fine-tuning prompts

- Debugging issues and comparing different models

- Deploying to different environments

- Creating your own templates

Humanloop has a website offering complete documentation and a GitHub repo for its source code.

20. Guidance

Guidance is a Python prompting library (with examples available as Jupyter notebooks) that lets you control what the model generates by setting constraints, such as:

- Regular expressions (regex)

- Context-free grammars (CFGs)

A Versatile Tool for Prompt Engineering

You can also interleave loops and conditional statements with generation, allowing for more complex and customized prompts. By letting you specify patterns, conditions, and rules for the output, Guidance gives you flexible yet controlled generation without chaining separate calls. It also provides multimodal support. Guidance and its documentation are available on GitHub.

21. Auto-GPT

Auto-GPT is a project consisting of four main components:

- A semi-autonomous LLM-powered generalist agent that you can interact with via a CLI

- Code and benchmark data to measure agent performance

- Boilerplate templates and code for creating your own agents

- A Flutter client for interacting with and assigning tasks to agents

The idea behind Auto-GPT is that it executes a series of tasks for you by iteratively prompting until it finds the answer. It automates workflows to execute more complex tasks, such as financial portfolio management.

Your AI-Powered Coding Assistant

You provide input to Auto-GPT (even just keywords like “recipe” and “app”), and the agent figures out what you want to build. It tends to be especially useful for coding tasks involving automation, like generating server code or refactoring. Auto-GPT's documentation is available on its website, and its source code (largely in Python) can be found on GitHub.

22. AgentGPT

AgentGPT allows users to create and deploy autonomous AI agents directly in the browser. Agents typically take a single line of input (a goal) and execute multiple steps to reach it. Each agent chains calls to LLMs and is designed to:

- Understand objectives

- Implement strategies

- Deliver results without human intervention

A Versatile AI Agent Platform

You can interact with AgentGPT via its web page or install it locally via the CLI. The project also makes prompt templates available, which it imports from LangChain. AgentGPT offers a homepage where you can test the service and a GitHub repo from which you can download the code (mostly in TypeScript).

23. MetaGPT

MetaGPT is a Python library that allows you to replicate the structure of a software company, complete with roles such as managers, engineers, architects, QAs, and others. It takes a single input line, such as “create a blackjack game,” and generates all the required artifacts, including:

- User stories

- Competitive analysis

- Requirements

- Tests

- A working Python implementation of the game

An interesting aspect of how this library works is that you can define whatever roles you need to fulfill a task, such as:

- Researcher

- Photographer

- Tutorial assistant

You then provide each role with a list of tasks and activities to fulfill.

MetaGPT has a website with an extensive list of use cases, a GitHub repo to see the source code, and a separate site for documentation.

24. Griptape

Griptape is a Python framework for developing AI-powered applications with structures like sequential pipelines, DAG-based workflows, and long-term memory. It follows a few design tenets: all framework primitives are useful and usable on their own and easy to plug into each other, and the framework is compatible with any capable LLM, data store, and backend through the abstraction of drivers.

Efficient Data Handling and Low-Latency Processing

When working with data through loaders and tools, Griptape aims to manage large datasets efficiently and keep the data off the prompt by default, making it easy to work with big data securely and with low latency. It is also much easier to reason about code written in a programming language like Python than about instructions written in natural language.

For that reason, Griptape defaults to Python in most cases, using natural language only when necessary. Griptape has both a documentation website and a repo on GitHub.
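A DAG-based workflow runs each task only after the tasks it depends on have finished, so independent steps (here, summarizing and keyword extraction) can branch from a shared input and merge later. The sketch below uses Python's standard `graphlib`. It is not Griptape's API, and the task names and lambdas are hypothetical stand-ins for LLM-backed tasks.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical tasks; each receives a dict of its parent tasks' outputs.
TASKS: dict[str, Callable[[dict[str, str]], str]] = {
    "load": lambda parents: "raw text",
    "summarize": lambda parents: f"summary of {parents['load']}",
    "keywords": lambda parents: f"keywords of {parents['load']}",
    "report": lambda parents: f"{parents['summarize']} + {parents['keywords']}",
}

# Graph: task -> set of tasks it depends on (its predecessors).
DEPENDENCIES: dict[str, set[str]] = {
    "load": set(),
    "summarize": {"load"},
    "keywords": {"load"},
    "report": {"summarize", "keywords"},
}


def run_dag(
    tasks: dict[str, Callable[[dict[str, str]], str]],
    deps: dict[str, set[str]],
) -> dict[str, str]:
    """Execute tasks in dependency order; raises graphlib.CycleError if the graph has a cycle."""
    results: dict[str, str] = {}
    for name in TopologicalSorter(deps).static_order():
        parent_outputs = {p: results[p] for p in deps[name]}
        results[name] = tasks[name](parent_outputs)
    return results


if __name__ == "__main__":
    print(run_dag(TASKS, DEPENDENCIES)["report"])
```

This sketch runs tasks one at a time. A real workflow engine could run `summarize` and `keywords` in parallel because neither depends on the other.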

25. Instructor

Instructor is a Python library that eases the process of extracting structured data like JSON from a variety of LLMs, including:

- GPT-3.5

- GPT-4

- GPT-4-Vision

- Other proprietary models and open-source alternatives

It supports function and tool calling alongside specialized sampling modes, improving ease of use and data integrity. It uses Pydantic for data validation and Tenacity for managing retries, and offers a developer-friendly API that simplifies handling complex data structures and partial responses. Resources for Instructor include:

- Documentation site

- GitHub repository
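The core loop behind this kind of extraction can be sketched with the standard library alone: validate the model's output against a schema, and if validation fails, ask again with the error message included. Instructor itself uses Pydantic and Tenacity. Here a dataclass plus hand-written checks stand in for Pydantic, and `parse_user`, `extract`, and `make_flaky_llm` are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class User:
    name: str
    age: int


def parse_user(raw: str) -> User:
    """Validate raw model output against the User schema; raise ValueError on mismatch."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("name"), str):
        raise ValueError("name must be a string")
    age = data.get("age")
    if not isinstance(age, int) or isinstance(age, bool):
        raise ValueError("age must be an integer")
    return User(name=data["name"], age=age)


def extract(prompt: str, llm: Callable[[str], str], max_retries: int = 3) -> User:
    """Ask for JSON, validate it, and re-ask with the validation error until it passes."""
    error = ""
    for _ in range(max_retries):
        message = prompt if not error else f"{prompt}\nYour last answer was invalid: {error}"
        try:
            return parse_user(llm(message))
        except ValueError as exc:
            error = str(exc)  # feed the error back into the next attempt
    raise RuntimeError(f"no valid output after {max_retries} attempts: {error}")


def make_flaky_llm() -> Callable[[str], str]:
    """Hypothetical stub: first reply has a string age, second reply is valid."""
    replies = iter(['{"name": "Ada", "age": "36"}', '{"name": "Ada", "age": 36}'])
    return lambda message: next(replies)


if __name__ == "__main__":
    print(extract("Extract the user: Ada is 36.", make_flaky_llm()))
```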

26. Vellum AI

Vellum AI is a developer tool designed to streamline the development and management of production-grade LLM products. The platform facilitates prompt comparison and large-scale evaluation, allowing seamless integration into AI workflows with ready-to-use RAG and APIs. It also supports easy deployment and ongoing enhancements in production environments.

A Powerful and Flexible LLM Framework

Vellum is a strong alternative to LangChain. It offers a more advanced prompt engineering playground, a comprehensive workflow builder, and a complete evaluation suite, and it is highly customizable and designed to operate efficiently at scale. Vellum is compatible with all major LLM providers, both proprietary and open-source.

27. Galileo

Galileo is great for improving and fine-tuning LLM applications because it has a wide range of features for:

- Prompt engineering

- Debugging

- Observability

The Galileo Prompt Inspector and LLM Debugger let you manage and test prompts, giving you more control over how the model works and the output quality. Galileo Evaluate allows you to create, manage, and track all versions of your prompt templates. It supports A/B comparison of prompts and their results to optimize prompts effectively.

Galileo integrates with various LLM providers and orchestration libraries, such as:

- LangChain

- OpenAI

- Hugging Face

This allows users to transfer prompts seamlessly.
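A/B prompt comparison boils down to running each prompt variant over the same dataset and comparing a metric. The sketch below uses exact-match accuracy. It is not Galileo's API, and `ab_compare`, `stub_llm`, and the example dataset are hypothetical.

```python
from typing import Callable


def ab_compare(
    templates: dict[str, str],
    dataset: list[tuple[str, str]],
    llm: Callable[[str], str],
) -> dict[str, float]:
    """Score each prompt template by exact-match accuracy over (input, expected) pairs."""
    scores: dict[str, float] = {}
    for name, template in templates.items():
        hits = sum(llm(template.format(input=x)).strip() == y for x, y in dataset)
        scores[name] = hits / len(dataset)
    return scores


def stub_llm(prompt: str) -> str:
    """Hypothetical model: echoes the text after the last colon, upper-cased only if asked."""
    text = prompt.rsplit(":", 1)[-1]
    return text.upper() if "UPPER CASE" in prompt else text


if __name__ == "__main__":
    templates = {
        "A": "Repeat this: {input}",
        "B": "Repeat this in UPPER CASE: {input}",
    }
    dataset = [("cat", "CAT"), ("dog", "DOG")]
    print(ab_compare(templates, dataset, stub_llm))
```

In practice you would swap exact match for a task-appropriate metric and use a larger, representative dataset.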

28. AutoChain

AutoChain is a lightweight and extensible framework for building generative AI agents. If you are familiar with LangChain, AutoChain is easy to navigate since the two share similar but simpler concepts. It supports building agents using custom tools and OpenAI function calling.

AutoChain includes simple memory tracking for conversation history and tools' outputs. AutoChain's automated multi-turn workflow evaluation with simulated conversations evaluates agent performance in complex scenarios.

AutoChain shares similar high-level concepts with LangChain and AutoGPT, lowering the learning curve for both experienced and novice users.

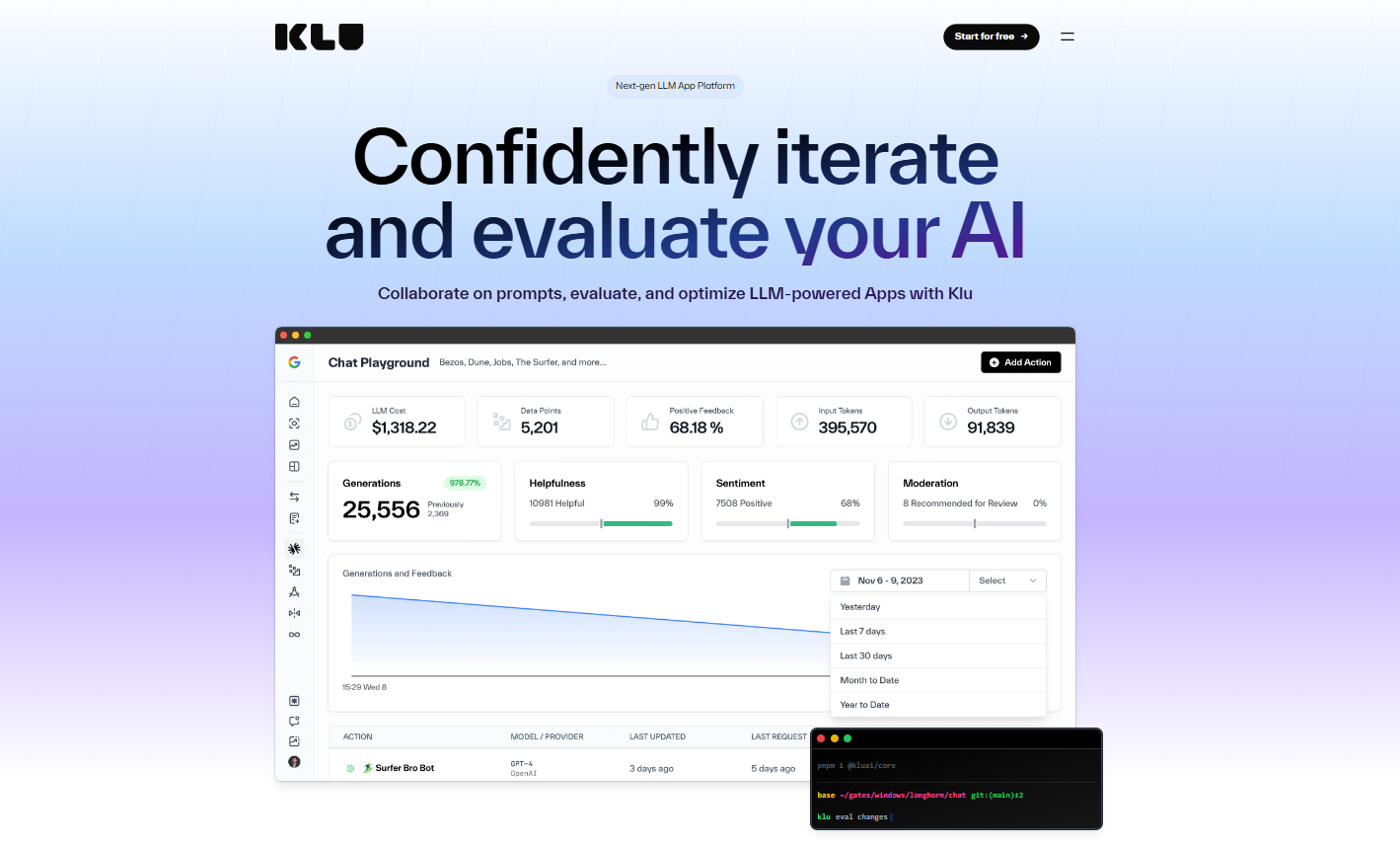

29. Klu.ai

Klu.ai is an LLM application platform with a unified API for accessing LLMs and integrating with diverse data sources and providers. It’s useful for prototyping, deploying multiple models, and optimizing AI-powered applications.

This product is a good option for organizations that want to accelerate the build-measure-learn loop and develop high-quality LLM applications. Klu.ai lets users connect multiple actions into workflows and provides abstractions for common LLM use cases, such as:

- LLM connectors

- Prompt templates

- Data management

Klu.ai integrates with multiple LLM providers, including:

- OpenAI

- Anthropic (Claude)

- AWS Bedrock

- HuggingFace

30. Braintrust

Braintrust is a platform for evaluating, improving, and deploying LLMs with tools for prompt engineering, data management, and continuous evaluation. It’s an excellent platform if you want to develop and monitor high-quality LLM applications at scale.

It provides a single API for accessing models from OpenAI, Anthropic, Mistral, and Meta's Llama 2 family, with built-in features like:

- Caching

- API key management

- Load balancing

It supports a long list of proprietary and open-source LLMs, and you can add custom ones. You interact with Braintrust through its Python and JavaScript (Node.js) SDKs.
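To make the caching and load-balancing features concrete, here is a minimal sketch of what a unified LLM gateway does. It is not Braintrust's API or SDK. `Gateway` and the two lambda providers are hypothetical, the cache key is just the prompt (a real gateway would also key on model and parameters), and API key management is omitted.

```python
import itertools
from typing import Callable

Provider = Callable[[str], str]


class Gateway:
    """Hypothetical single entry point over several providers, with caching and round-robin."""

    def __init__(self, providers: list[Provider]) -> None:
        self._next_provider = itertools.cycle(providers)  # round-robin load balancing
        self._cache: dict[str, str] = {}
        self.calls = 0  # number of requests that actually reached a provider

    def complete(self, prompt: str) -> str:
        if prompt in self._cache:  # cache hit: no provider call, no cost, no latency
            return self._cache[prompt]
        provider = next(self._next_provider)
        self.calls += 1
        result = provider(prompt)
        self._cache[prompt] = result
        return result


if __name__ == "__main__":
    gateway = Gateway([lambda p: f"A:{p}", lambda p: f"B:{p}"])
    print(gateway.complete("x"), gateway.complete("y"), gateway.complete("x"))
    print("provider calls:", gateway.calls)
```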

31. HoneyHive

HoneyHive AI evaluates, debugs, and monitors production LLM applications. It lets you:

- Trace execution flows

- Customize event feedback

- Create evaluation and fine-tuning datasets from production logs

It is built for teams who want to ship reliable LLM products, with a focus on observability through performance tracking. It offers native SDKs in Python and TypeScript, with additional support for enterprise customers in languages like:

- Go

- Java

- Rust

It integrates with LangChain and LlamaIndex for logging traces and evaluating pipelines.
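Tracing an execution flow usually means recording a span for each step, including its name, nesting, timing, and output. The decorator below is a minimal, hypothetical sketch of that idea. It is not HoneyHive's SDK, and `traced`, `TRACE`, `retrieve`, and `answer` are illustrative names. It uses a module-level depth counter, so it is not thread-safe.

```python
import functools
import time
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []  # collected spans; a real tool would send these to a backend
_depth = 0  # current nesting level (module-level, so not thread-safe)


def traced(fn: Callable) -> Callable:
    """Record each completed call's name, nesting depth, duration, and output as a span."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        global _depth
        start = time.perf_counter()
        _depth += 1
        try:
            result = fn(*args, **kwargs)
        finally:
            _depth -= 1  # restore depth even if the call raises
        TRACE.append({
            "name": fn.__name__,
            "depth": _depth,
            "seconds": time.perf_counter() - start,
            "output": result,
        })
        return result
    return wrapper


@traced
def retrieve(query: str) -> list[str]:
    return [f"doc about {query}"]  # hypothetical retrieval step


@traced
def answer(query: str) -> str:
    docs = retrieve(query)  # nested call becomes a child span
    return f"answer from {len(docs)} doc(s)"


if __name__ == "__main__":
    answer("RAG")
    for span in TRACE:
        print("  " * span["depth"] + f"{span['name']}: {span['output']!r}")
```

Spans are appended when a call finishes, so child steps appear before their parent in `TRACE`.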

32. Parea AI

Parea AI is a platform for debugging, testing, and monitoring LLM applications. It provides developers with tools to experiment with prompts and chains, evaluate performance, and manage the entire LLM workflow from ideation to deployment. It is built for teams who want to build and optimize production-ready LLM products with detailed tracing and logging.

Parea provides a set of pre-built and custom evaluation metrics you can plug into your evaluation process. It includes the option to deploy prompts for your LLM applications and use them via the Python or TypeScript SDK.

33. SimpleAIChat

Simpleaichat is a Python package designed to streamline interactions with chat applications like ChatGPT and GPT-4. It features robust functionalities while maintaining code simplicity. This tool boasts a range of optimized features to achieve swift and cost-effective interactions with ChatGPT and other advanced AI models.

Users can effortlessly create and execute chat sessions by employing just a few lines of code. The package employs optimized workflows that curtail token consumption, effectively reducing costs and minimizing latency. The ability to concurrently manage multiple independent chats further enhances its utility.

Simpleaichat’s streamlined codebase eliminates the need to delve into intricate technical details. The package also supports asynchronous operations, including streaming responses and tool integration, and will soon support PaLM and Claude-2.

34. Fabric

Fabric is a no-code platform that lets users create AI agents through an intuitive drag-and-drop interface. It's an ideal tool for building expansive language model apps.

Fabric also caters to organizations seeking to develop LLM apps without hiring a dedicated developer. What sets Fabric apart from others on this list is that it introduces its own components rather than relying entirely on LangChain's.

A notable feature of Fabric is its high level of customization, providing users the flexibility to incorporate various LLMs such as:

- Claude

- Llama

- And more

This flexibility lets users go beyond GPT and choose whichever open-source LLM fits their specific requirements when building AI agents with Fabric.

35. BabyAGI

BabyAGI presents itself as a Python script serving as an AI-driven task manager. It leverages OpenAI, LangChain, and vector databases, including Chroma and Pinecone to:

- Establish

- Prioritize

- Execute tasks

The Iterative Process of Task Selection and Execution

In each iteration, BabyAGI takes the first task from its task list and passes it to an execution agent. The agent uses GPT-3.5-turbo by default and attempts to complete the task based on contextual cues. The result is then enriched and stored in the vector database.

Subsequently, BabyAGI proceeds to generate fresh tasks and rearranges their priority based on the outcome and objective of the preceding task.
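The loop described above can be sketched as a plain Python function. The three callbacks stand in for the LLM calls BabyAGI makes, and a list stands in for the vector database. `baby_agi_loop` and the stub callbacks are hypothetical, not BabyAGI's code. The `max_iterations` cap is a design choice that keeps the loop from running forever.

```python
from collections import deque
from typing import Callable


def baby_agi_loop(
    objective: str,
    first_task: str,
    execute: Callable[[str, str], str],
    create_tasks: Callable[[str, str], list[str]],
    prioritize: Callable[[list[str], str], list[str]],
    max_iterations: int = 5,
) -> list[tuple[str, str]]:
    """Pull a task, execute it, store the result, create new tasks, then reprioritize."""
    tasks = deque([first_task])
    memory: list[tuple[str, str]] = []  # stands in for the vector database
    for _ in range(max_iterations):  # hard cap guards against endless task generation
        if not tasks:
            break
        task = tasks.popleft()
        result = execute(objective, task)
        memory.append((task, result))
        new_tasks = create_tasks(result, objective)
        tasks = deque(prioritize(list(tasks) + new_tasks, objective))
    return memory


if __name__ == "__main__":
    history = baby_agi_loop(
        objective="write a blog post",
        first_task="research topic",
        execute=lambda obj, task: f"done: {task}",
        create_tasks=lambda result, obj: ["write summary"] if result == "done: research topic" else [],
        prioritize=lambda tasks, obj: sorted(tasks),  # hypothetical: alphabetical priority
    )
    for task, result in history:
        print(task, "->", result)
```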

5 Top Tips for Selecting a LangChain Alternative

1. Identify Your Goals and Requirements

Before choosing an alternative to LangChain, identify your goals and requirements to help narrow your options. Consider the specific capabilities you need, such as:

- Data retrieval

- Collaborative features

- Prompt engineering tools

- Evaluations

This will help you identify the LLM framework or platform that aligns with your business goals, different use cases, and application requirements.

2. Evaluate Ease of Use

Every LLM framework has a different learning curve, which can affect your team and project timelines. Consider the technical expertise required to use the alternative framework effectively. To simplify adoption, look for options with comprehensive documentation, active community/company support, and intuitive interfaces.

3. Assess Performance and Scalability

The performance of an LLM framework can vary widely. Investigate how well the framework handles your data volumes and delivers fast response times. Ensure it can scale seamlessly to accommodate growth without significant re-engineering. Benchmark the tool's efficiency in key areas like structured data handling, indexing, retrieval, and latency.
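A small timing harness is enough to start comparing candidates on latency. This is a hypothetical sketch: `benchmark` is not part of any framework, and you would pass it a zero-argument function that issues one real query through the framework under test. Wall-clock numbers depend heavily on network and model load, so compare frameworks under the same conditions.

```python
import math
import statistics
import time
from typing import Callable


def benchmark(call: Callable[[], object], runs: int = 20) -> dict[str, float]:
    """Time repeated calls; report median, nearest-rank p95, and max latency in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    p95_index = math.ceil(0.95 * len(samples)) - 1  # nearest-rank percentile
    return {
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


if __name__ == "__main__":
    # Hypothetical stand-in workload; replace with a real query through each framework.
    print(benchmark(lambda: sum(range(10_000))))
```

Reporting a tail percentile alongside the median matters for user-facing apps, where occasional slow responses are often more noticeable than the typical case.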

4. Prioritize Interoperability and Flexibility

When searching for alternatives to LangChain, prioritize options that integrate with your existing stack. Look for frameworks that use open, standard formats, or allow easy integration with your existing systems. The ability to work with various data sources, models, and downstream applications is crucial for avoiding vendor lock-in and adapting to evolving needs across various industries.

5. Conduct Proof-of-Concept Trials

Before committing to an alternative to LangChain, run focused experiments to validate its suitability for your use case. Engage your team to test the framework's core features, identify potential limitations, and gather feedback. Hands-on experience will provide valuable insights to inform your decision-making.

Start Building GenAI Apps for Free Today with Our Managed Generative AI Tech Stack

Lamatic offers a managed Generative AI Tech Stack. Our solution provides:

- Managed GenAI middleware

- Custom GenAI API (GraphQL)

- Low-code agent builder

- Automated GenAI workflow (CI/CD)

- GenOps (DevOps for GenAI)

- Edge deployment via Cloudflare workers

- Integrated vector database (Weaviate)

Start building GenAI apps for free today with our managed generative AI tech stack.