Integrating generative AI into your workflow is all about finding the right platform for your needs. With so many options available, it can feel overwhelming to narrow them down. The good news? This article will help you understand the ins and outs of Gen AI Platforms so you can quickly and seamlessly integrate a generative AI platform that accelerates innovation and enhances your product’s capabilities without disrupting existing workflows.

Lamatic’s Generative AI Tech Stack is the ideal solution to help you achieve these objectives. By focusing on the platform's specific capabilities and how they can benefit your processes, you’ll be able to make a more informed decision about your integration and get to the good part of generative AI: the innovation it can bring to your business.

What Are the Guidelines for Enterprise Generative AI Models?

Generative AI can create new content, from text to images and audio, by learning patterns and structures from existing data. Enterprise generative AI applies this technology to business use cases, such as creating marketing copy or generating code. The enterprise AI models customarily used to perform these tasks are large language models (LLMs). LLMs are particularly good at understanding human language, making them helpful in automating communication and text-based tasks.

The Principles of Enterprise Generative AI

Enterprise generative AI systems are not all created equal. Businesses looking to implement these solutions should ensure they follow certain guidelines to minimize risks and optimize performance. At a minimum, an enterprise generative AI model should be:

- Trusted

- Consistent

- Controlled

- Explainable

- Reliable

- Secure

- Ethical

- Fair

- Licensed

- Sustainable

Let's break down what each of these principles means:

Trusted

Business leaders must ensure the generative AI model they use produces trustworthy outputs. For instance, most current LLMs can provide different outputs for the same input. This limits the reproducibility of testing, which can lead to releasing models that are not sufficiently tested.

Consistent

Business leaders need to ensure the generative AI model they use produces trustworthy outputs. For instance, most current LLMs can provide different outputs for the same input. This limits the reproducibility of testing, which can lead to releasing models that are not sufficiently tested.

Controlled

Enterprise generative AI models should be hosted in an environment (on-prem or cloud) where businesses can control the model at a granular level. The alternative is using online chat interfaces or APIs, like OpenAI’s LLM APIs. The disadvantage of relying on APIs is that the user may need to expose confidential, proprietary data to the API owner, increasing the attack surface for proprietary data. Global leaders like Amazon and Samsung experienced data leaks of internal documents and valuable source code when their employees used ChatGPT.

OpenAI later reversed its data retention policies and launched an enterprise offering. However, there are still risks to using cloud-based genAI systems. For example, the API provider or bad actors working at the API provider may:

- Access and use the enterprise’s confidential data to improve their solutions

- Accidentally leaking enterprise data

Explainable

Unfortunately, most generative AI models cannot explain why they provide specific outputs. This limits their use as enterprise users who would like to base critical decision-making on AI-powered assistants and want to know the data that drove such decisions. Explainable AI for LLMs is still an area of research.

Reliable

Hallucination (i.e., making up falsehoods) is a feature of LLMs and is unlikely to be resolved entirely. Enterprise genAI systems require the necessary processes and guardrails to ensure that humans minimize, detect, or identify harmful hallucinations before they can harm enterprise operations.

Secure

Enterprise-wide models may have interfaces for external users. Bad actors can use techniques like prompt injection to have the model perform unintended actions or share confidential data.

Ethical

Ethically trained enterprise generative AI models should be trained on ethically sourced data where Intellectual Property (IP) belongs to the enterprise or its supplier, and personal data is used with consent. Generative AI IP issues, such as training data that includes copyrighted content where the copyright doesn’t belong to the model owner, can lead to unusable models and legal processes.

Using personal information in training models can lead to compliance issues. For example, OpenAI’s ChatGPT must disclose its data collection policies and allow users to remove their data after the Italian Data Protection Authority (Garante) concerns. Read generative AI copyright issues & best practices to learn more.

Fair

Bias in training data can impact model effectiveness.

Licensed

The enterprise needs a commercial license to use the model. For example, models like Meta’s LLaMa have noncommercial licenses, which prevent their legal use in most use cases in a for-profit enterprise. Models with permissive licenses, like Vicuna built on top of LLaMa, also have noncommercial licenses since they leverage the LLaMa model.

Sustainable

Training generative AI models from scratch is expensive and consumes significant energy, contributing to carbon emissions. Business leaders should know the total cost of generative AI technology and identify ways to minimize its ecological and financial costs. Enterprises can strive towards most of these guidelines, which exist on a continuum; however, licensing, ethical concerns, and control issues are clear-cut.

It is evident how to achieve correct licensing and avoid ethical problems, but these are challenging goals. Achieving control requires firms to build their foundation models; however, most businesses need clarification about how to accomplish this.

How Can Enterprises Build Foundation Models?

There are two approaches to building your firm’s LLM infrastructure in a controlled environment.

Build Your Own Model (BYOM)

This approach allows world-class performance, costing a few million dollars, including computing (1.3 million GPU hours on 40GB A100 GPUs in case of BloombergGPT) and data science team costs.

Improve an Existing Model

1. Fine-tuning

This is a cheaper machine-learning technique for improving the performance of pre-trained large language models (LLMs) using selected datasets. Instruction fine-tuning was previously done with large datasets, but now it can be achieved with a small dataset (e.g., 1,000 curated prompts and responses in the case of LIMA). Early commercial LLM fine-tuning experiments highlight the importance of a robust data collection approach optimizing data quality and quantity.

Compute costs in research papers have been as low as $100 while achieving close to world-class performance. Model fine-tuning is an emerging domain with new approaches like Inference-Time Intervention (ITI), a strategy to reduce model hallucinations, published weekly.

2. Reinforcement Learning from Human Feedback (RLHF)

Human in-the-loop assessment can further improve a fine-tuned model.

3. Retrieval augmented generation (RAG)

This allows businesses to pass crucial information to models during generation time. Models can use this information to produce more accurate responses. Given the high costs involved in BYOM, we recommend businesses initially use optimized versions of existing models. Language model optimization is an emerging domain, with new approaches being developed weekly. Therefore, companies should be open to experimentation and ready to change their approach.

Related Reading

- How to Build AI

- Gen AI vs AI

- GenAI Applications

- Generative AI Customer Experience

- Generative AI Automation

- Generative AI Risks

- How to Create an AI App

- AI Product Development

- GenAI Tools

- Enterprise Generative AI Tools

- Generative AI Development Services

39 Best Enterprise Gen AI Platforms for Fast Deployment

1. Lamatic: Fast-Tracking GenAI App Development

Lamatic offers a comprehensive Generative AI tech stack that empowers teams to rapidly implement GenAI solutions without accruing tech debt. Our platform provides:

- Managed GenAI Middleware

- Custom GenAI API (GraphQL)

- Low Code Agent Builder

- Automated GenAI Workflow (CI/CD)

- GenOps (DevOps for GenAI)

- Edge deployment via Cloudflare workers

- Integrated Vector Database (Weaviate)

Lamatic empowers teams to rapidly implement GenAI solutions without accruing tech debt. Our platform automates workflows and ensures production-grade deployment on the edge, enabling fast, efficient GenAI integration for products needing swift AI capabilities.

Start building GenAI apps for free today with our generative AI tech stack.

2. ZBrain, Supercharging Operational Workflows

ZBrain is a leading enterprise generative AI platform that aims to reshape operational workflows by leveraging businesses’ proprietary data. This comprehensive, full-stack solution empowers businesses to develop secure Large Language Model-based applications with diverse Natural Language Processing (NLP) capabilities. Apps built on ZBrain simplify content creation, ensuring outputs align seamlessly with brand guidelines and business workflows. They excel in data analysis, extracting meaningful patterns and trends from data to provide actionable insights for informed decision-making.

Offering versatility across various industries, ZBrain integrates seamlessly with over 80 data sources and supports multiple LLMs such as:

- GPT-4

- PaLM 2

- Llama 2

- BERT

Its risk governance feature ensures data safety by identifying and mitigating risks, while ZBrain Flow enables intuitive business logic creation for apps without coding. With competitive pricing plans catering to diverse needs, ZBrain emerges as a powerful ally for enterprises seeking to optimize operations and leverage generative AI for enhanced productivity and innovation.

3. Scale, The Complete Toolkit for AI Integration

Scale is a leading force in the enterprise generative AI landscape with its all-encompassing platform, offering the complete toolkit for businesses looking for AI integration. Trusted by prominent AI teams globally, this platform facilitates generative AI applications’ seamless development and deployment. Tackling customization, performance, and security challenges, Scale collaborates with premier model providers:

- Boasts a robust data engine for continuous improvement

- Ensures independence from specific clouds or tools

Leveraging a tailored approach, Scale fine-tunes models with enterprise data and employs expert, prompt engineering for efficient application development. With its high-end features, Scale provides tangible solutions to critical business needs, promising a secure, scalable, and transformative journey into generative AI for enterprises.

4. C3: Transforming Knowledge Access for Enterprises

C3 generative AI stands at the forefront of enterprise generative AI applications, offering a unified knowledge source that transforms how businesses access, retrieve, and leverage critical insights. This cutting-edge solution combines natural language understanding, generative AI, reinforcement learning, and retrieval AI models within C3 AI’s patented model-driven architecture. Ensuring accurate responses traceable to ground truth, C3 generative AI provides rapid access to high-value insights while maintaining stringent enterprise-grade data security and access controls.

Its domain-specific responses cater to various industries, including:

- Aerospace

- Defense

- Financial services

- Healthcare

- Manufacturing

- Oil & gas

- Telecommunications

- Utilities

With tailored solutions for business processes and enterprise systems, C3 generative AI marks a significant milestone in deploying domain-specific generative AI models, offering unprecedented efficiency and insights across diverse sectors.

5. DataRobot: Bridging the Generative AI Confidence Gap

DataRobot stands as a pivotal player in bridging the generative AI confidence gap, delivering tangible real-world value in the ever-evolving landscape of AI. With over a decade of expertise, DataRobot offers an open, end-to-end AI lifecycle platform, empowering teams to navigate the intricacies of the AI landscape confidently. Unifying predictive and generative AI workflows, DataRobot eliminates silos and simplifies the deployment of high-quality generative AI applications.

The platform ensures adaptability by allowing innovation with the best-of-breed components across cloud environments while maintaining security and cost control. With a proven track record across multiple domains, DataRobot is committed to supporting organizations in making informed decisions today that shape tomorrow’s opportunities, showcasing how generative AI can drive real-world value.

6. Xebia GenAI Platform: Addressing GenAI Deployment Challenges

Acknowledging the challenges in deploying GenAI models, especially regarding data privacy and security, Xebia offers an innovative GenAI Platform. This Machine Learning Operations (MLOps) solution, an extension of the Xebia Base, is meticulously designed to facilitate the integration of GenAI-powered applications into existing infrastructures. The platform covers crucial components ensuring an end-to-end lifecycle for GenAI models, such as:

- Models

- Prompts

- Monitoring

- Interfaces

- Cloud and data foundations

This approach addresses the challenges of deploying GenAI in production and provides benefits such as:

- Model standardization

- Version control

- Model governance

- Scalability

- Cost optimization

Leveraging transfer learning, Xebia’s GenAI Platform empowers businesses to unlock the potential of generative AI, driving innovation and efficiency while ensuring the utmost security and control.

7. Prophecy: Simplifying GenAI for Private Enterprise Data

Prophecy is a generative AI platform focusing on the unique challenges posed by private enterprise data. In a landscape where building GenAI applications often encounters hurdles related to data complexities, Prophecy stands out by simplifying the integration of generative AI into existing infrastructures. Offering a two-step solution for data engineers, Prophecy facilitates the creation of GenAI apps on any enterprise data within a week. Prophecy ensures a streamlined and efficient process on unstructured data to build a knowledge warehouse and implement streaming ETL pipelines for inference by running:

- Extract

- Transform

- Load (ETL)

The platform’s key features include ETL pipelines on Apache Spark, unstructured data transformations, support for:

- Multiple large language models

- Vector database storage

- At-scale execution on Spark

Prophecy’s emphasis on simplicity, scalability, and orchestration makes it a pivotal player in unleashing the full potential of generative AI for enterprise applications.

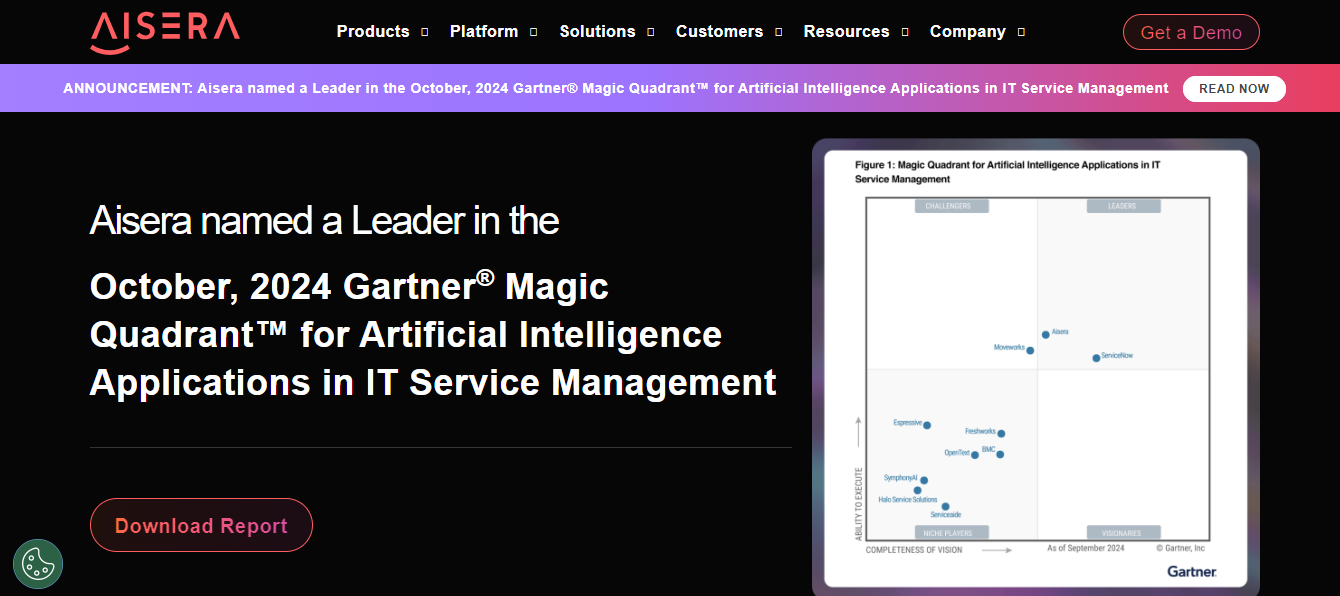

8. Aisera: A Comprehensive, Enterprise-Ready GenAI Solution

Aisera emerges as a frontrunner in the generative AI landscape, offering a comprehensive suite of solutions tailored to enterprise needs. The platform’s key offerings include AiseraGPT, an intuitive turnkey solution featuring action bots powered by domain-specific LLMs, and AI Copilot, a concierge bot with proactive notifications and customizable prompts. Aisera’s Enterprise AI

Search ensures personalized and permission-aware results, while AiseraLLM allows users to build and operationalize their LLMs in weeks. Recognized as a leader in GPT and generative AI, Aisera stands out for its commitment to responsible AI, privacy, and security, making it a top choice for organizations seeking customizable yet out-of-the-box virtual agents.

9. Coveo: Generative AI for Business Relevance

Coveo stands at the forefront of the generative AI transformation with its Relevance AI Platform, offering a robust solution tailored for enterprise readiness. The platform’s generative answering delivers accurate and trustworthy answers that enhance user experience, featuring:

- Designed for e-commerce

- Website

- Workplace applications

Coveo utilizes mature, large language models to identify relevant snippets in diverse documents, generating factual, up-to-date responses with citations. The platform prioritizes enterprise security with:

- Stringent data security protocols

- Automatic data freshness

- Personalized, human-like tone responses

With an illustrious history of driving customer growth for over 18 years, Coveo is a pioneer in the generative AI landscape, empowering enterprises with cutting-edge AI capabilities.

10. Addepto: Improving Productivity Across Enterprises

Addepto’s enterprise generative AI platform is at the forefront of transformative innovation, offering organizations a comprehensive solution to build, deploy, and manage AI models. This cutting-edge platform utilizes advanced generative AI techniques to develop:

- Synthetic data

- Generate content

- Automate tasks

- Optimize processes

- Enhance efficiency

Addepto empowers businesses to unlock limitless possibilities with a focus on:

- Improved productivity

- Enhanced security

- Cost reduction

- Faster decision-making

- Scalability

- Enhanced creativity

The platform seamlessly incorporates with existing systems, ensuring:

- Data privacy

- Security

- Regulatory compliance.

Any industry can benefit from Addepto’s enterprise-generative AI platform whether in:

- Healthcare

- Finance

- Manufacturing

- Retail

11. Pega GenAI: Accelerating Business Innovation

Pega GenAI is a groundbreaking generative AI platform for enterprises that propels productivity and creativity to new heights. This transformative technology allows organizations to innovate rapidly, leveraging AI and automation to handle complex tasks, enabling teams to concentrate on high-value activities.

Pega GenAI facilitates responsible adaptation, offering enterprise-ready governance to instill confidence in AI-driven endeavors. From accelerating low-code app development and strategy optimization to streamlining customer touchpoints and unlocking insights in the back office, Pega GenAI delivers a suite of 20 generative AI-powered boosters seamlessly integrated into the Pega Infinity platform.

12. watsonx: IBM's Next Generation Conversational AI

watsonx (previously IBM Watson) is IBM’s new and improved conversationonal AI, an update on IBM Watson. It offers an AI studio for building and deploying:

- Custom apps

- Governance tools

- A robust virtual assistant platform.

Before ChatGPT was all the rage in the news, IBM Watson was making the rounds. It’s been a capable conversational AI useful for internal or customer chatbots for years. With watsonx, IBM has developed a more robust solution for similar use cases.

13. NVIDIA AI: Generative AI for Visual Content Creation

NVIDIA’s generative AI models are renowned for their performance and efficiency, particularly in graphics and visual content creation. NVIDIA’s AI solutions are widely used in:

- Gaming

- Entertainment

- Design industries

14. Microsoft Azure AI: Trusted AI Services for Business

Microsoft Azure AI provides a comprehensive range of AI services, including advanced generative models. Azure AI’s seamless integration with other Microsoft services makes it a popular choice for businesses leveraging AI for various applications.

15. Amazon Web Services: Scalable and Reliable AI Services

AWS offers robust AI services, including generative AI models that can be easily integrated into various applications. Essential services include:

- Amazon SageMaker is used for building and deploying models

- AWS DeepComposer is used for creating music

- AWS DeepArt is used for generating visual art

- Amazon Polly is used for lifelike text-to-speech

AWS’s AI services are scalable and reliable, making them suitable for businesses of all sizes.

16. Adobe Sensei: Generative AI for Creative Workflows

Adobe Sensei leverages generative AI to enhance creative workflows, providing:

- Image and video editing tools

- Content creation, and more

Adobe’s AI solutions are popular among creative professionals and enterprises alike.

17. Hugging Face: Open-Source Generative AI Applications

Hugging Face is an open-source AI company known for its state-of-the-art NLP models. Their transformers library is widely used in the industry for generative text applications, providing accessible and powerful AI tools.

18. Google Cloud Vertex AI: Enterprise AI with Robust MLOps Tools

Vertex AI is Google Cloud’s enterprise AI platform, with robust machine learning operations tools. It also offers access to:

- Gemini

- Search

- Other commercial AI models from Google

The Google AI sub-division created it. It’s a good choice for companies with robust data science expertise. Implementation can be challenging without the right know-how and experience, so it’s unsuitable for small companies without that expertise.

19. OpenAI API: The Foundation for Many GenAI Applications

OpenAI, best known for ChatGPT, launched an API (application programming interface) and developer platform in 2020. Recently, with the increased power of ChatGPT models, it has become very popular. Today, many SaaS apps use OpenAI’s API to use generative artificial intelligence to deliver a better experience.

The OpenAI API is most suitable for SaaS or app developers who want to deliver GenAI functionality to their users. For example, this could be a virtual assistant that answers questions about the app.

20. TensorFlow: A Machine Learning Platform for GenAI Models

TensorFlow is a machine learning platform that helps teams test and deploy various open-source models. The Google Brain team originally developed it for internal use. It’s suitable for aspiring ML students with its:

- Open-source models

- Robust training materials

- Certification program

It’s also a good platform for teams that want to expand and improve machine learning models if you don’t want to rely on finished models from commercial providers like OpenAI.

21. Dataiku: A Centralized AI Platform for GenAI Applications

Dataiku is a centralized AI platform with functionality like GenAI and AI-powered analytics. It offers no original AI models but allows you to leverage open-source models for various use cases. In addition, it offers pre-made applications powered by AI for use cases such as predictive analytics. It’s a solid option for smaller companies experimenting with open-source AI.

22. SAP Hana Cloud: A Data Management Platform for Machine Learning

SAP Hana Cloud is SAP’s intelligent database management platform for machine learning applications. It’s a solution built to store data uniquely through multi-model processing. It gives you the power of many traditional databases, like:

- Relational

- Document store

- Graph

- Geospatial

- Vector

This capability suits various AI use cases and large companies with big data sets. (It’s similar to other cloud platforms like Amazon Web Services in this sense.)

23. Pico

With Pico, app creation relies on prompts, is the best for app creation, making it accessible even for beginners. As you type your first prompt outlining the app’s functionality, a line showing the AI carefully creating the code in real time appears on the screen. As your application develops, line by line, you can observe the smooth transition between:

- HTML

- CSS

- JavaScript

The advantages of Pico are here:

- Approachable for beginners

- Includes a few advanced features, such as:

- Databases

- APIs

24. Framer

Framer is an AI website builder for designers and other creative workers. The platform includes strong AI design capabilities, like Auto Layout, which automatically adjusts the spacing and arrangement of website elements, and is best for website building. To get started, use one of their free website templates. Here are the advantages of Framer:

- High customization options from custom libraries to animation and typography.

- Robust features for adding effects and animations, including entrance animations and complex flows.

25. Notion AI

Notion AI brings generative capabilities to the popular productivity tool, enhancing workflows with AI-powered:

- Text generation

- Summarization

- Task automation

Notion AI is one productivity-enhancing aid, no matter your role within tech. It didn’t fail to provide instant answers by generating or improving content summarizing databases. This enables users to focus more on core tasks. The advantages of Notion AI are as follows:

- Assists with various tasks, such as generating summaries, drafting content, etc.

- Allows seamless integration of other tools.

- Understands the context of your workspace, helping generate more relevant suggestions.

26. Perplexity

Founded in 2022 by former AI researchers from OpenAI and Meta, Perplexity has rapidly emerged as one of the leading Gen AI platforms in search and information retrieval. Perplexity's AI-powered search engine stands out for its ability to provide concise, accurate answers to complex queries while attributing information directly within the text using hyperlinked citations. The platform's innovative approach has garnered significant attention from tech industry insiders and investors alike. In a critical vote of confidence, banking giant Softbank invested up to US$20m in Perplexity, helping it improve its services further.

27. AlphaCode

Developed by Google DeepMind, AlphaCode is an advanced coding tool capable of generating computer programs at a competitive level. It has achieved an estimated rank within the top 54% of participants in programming competitions, demonstrating its ability to solve new problems requiring:

- Critical thinking

- Logic

- Algorithmic knowledge

- Coding skills

- Natural language understanding

The tool uses transformer-based language models to generate code at an unprecedented scale and then filters the output to a small set of “promising programs”.

Google DeepMind, the team behind AlphaCode, consists of a diverse group of:

- Scientists

- Engineers

- Ethicists

- Other professionals

Their collective goal is to:

- Solve intelligence

- Advance scientific knowledge

- Benefit humanity through innovations

28. Adobe Sensei

Adobe's Sensei platform harnesses the power of cloud AI and machine learning to enhance creative experiences. It aims to:

- Deepen insights

- Boost creative expression

- Streamline tasks and workflows

- Enable real-time decision-making

Adobe recently announced several Gen AI innovations across its Experience Cloud, which are set to redefine how businesses deliver customer experiences. Adobe Sensei GenAI will utilize multiple large language models (LLMs) within Adobe Experience Platform, adapting to unique business needs. Adobe Firefly, a new family of creative Gen AI models, also focuses on generating images and text effects designed for safe commercial use.

29. DALL-E 3

DALL-E, which began as a research project, was introduced by OpenAI in January 2021 and works on the ChatGPT suite of offerings. It is remarkable for creating images through natural language prompts. New iterations have editing tools that allow you to edit some aspects of the photo's generation with a subsequent prompt. Following many iterations, including the DALL-E 2 system, which can combine concepts, attributes, and styles with greater sophistication, OpenAI has now released DALL-E 3. It is available to all:

- ChatGPT Plus

- Team

- Enterprise users

- Developers through an API

This tool can also expand images beyond their original canvas, creating expansive new compositions. Not only does it address ethical concerns about copying from artists’ source material, but DALL-E 3 understands significantly more nuance and detail than previous systems, allowing users to translate ideas into exceptionally accurate images easily. They've also employed advanced techniques to prevent the generation of photorealistic images of real individuals' faces, including those of public figures.

30. Bing AI

Microsoft launched its AI-powered Bing search engine in early 2023, integrating it into the Edge browser. This advanced search tool is capable of:

- Delivering improved search results

- More comprehensive answers

- New chat experience

- Content generation capabilities

These features are powered by OpenAI's latest and most advanced GPT-4 model. In addition to enhanced search functionality, Bing AI includes the Bing Image Creator. This feature makes Bing one of the few search platforms capable of generating written and visual content in one place, supporting over 100 languages.

31. GitHub Copilot

GitHub Copilot, a cloud-based AI tool, is a collaborative effort between:

- GitHub

- OpenAI

- Microsoft

The Gen AI-powered code completion tool, designed to help developers write code more efficiently, is available through GitHub personal accounts (GitHub Copilot for Individuals) or organisation accounts (GitHub Copilot for Business). Trained on billions of lines of code, GitHub Copilot can transform natural language prompts into coding suggestions across dozens of programming languages. It analyses the context from comments and existing code to instantly suggest individual lines and entire functions.

32. Claude

Anthropic, the company behind Claude, is committed to developing reliable AI systems and researching AI opportunities and risks. Claude 3, their latest model, offers improved performance and more extended response capabilities and can be accessed via API or through a new public-facing beta website, claude.ai.

It excels in various tasks, from engaging in sophisticated dialogue to generating creative content and following detailed instructions. Given that the models can understand more context, Anthropic says they can process more information. Alongside Claude 2, Anthropic offers a more economical alternative called Claude Instant. This model is capable of handling a range of tasks, including:

- Casual conversation

- Text analysis

- Summarisation

- Document comprehension

It provides businesses with flexible AI solutions to suit their needs.

33. Gemini

Google’s AI chatbot Gemini seeks to use a mix of database learning, compiled with live information, to provide its users with holistic and accurate answers to their queries. Spawned first as Bard in early 2023, it moved Bard to PaLM 2, a new language model, and then, almost exactly a year later, was rebranded to Gemini. Using RAG techniques, it draws on information from the web to provide fresh, high-quality responses. It is capable of following instructions and completing requests thoughtfully, answering questions, generating different creative text formats, answering mathematical questions, and creating code. Gemini is available in hundreds of countries worldwide and supports multiple languages, giving it a more universal edge than its contemporaries.

34. Cohere Generate

Cohere Generate is a large language model API service provided by Cohere designed for text generation tasks. It allows organizations to train custom AI versions on their data, helping the model better understand company-specific terminology and requirements. Features Of Generate:

- Multiple Language Support

- You can control the temperature of the response generated, which allows you to get the most appropriate response and control its randomness.

35. Character.ai

Character.ai is a platform that lets users design personalized AI assistants. Users can tailor traits like an assistant’s personality and avatar to fit their preferences, and the platform also has assistants for specific contexts readily available.

For more common applications, users can search for assistants based on categories like:

- Writing

- Learning

- Gaming

Type:

- Text

- Image

Price:

A free plan is available, with paid plans starting at $9.99 per month.

Use cases:

- Preparing responses for a job interview

- Practicing speaking a second language

- Receiving travel tips for a vacation destination

36. Midjourney

Midjourney generates images based on natural language prompts. The tool is accessible through its website or a Discord bot, which can be prompted to create an image using the “/imagine” command. Since its launch in 2022, Midjourney has become a popular (yet controversial) tool for publications, authors, journalists, and other creatives.

It even became the first platform of its kind to produce an image that won an actual art competition, sparking both wonder and widespread debate.

Type: Image

Price:

Plans start at $10 per month.

Use cases:

- Crafting graphics for social media posts

- Designing cover art for publications

- Creating visuals to be printed on company swag

37. Imagen 3

Imagen 3 is Google DeepMind’s latest image generator that can process naturally written prompts, so users don’t need to be skilled in prompt engineering. Imagen 3 is built to understand and capture finer details like textures, camera angles, and lighting, enabling users to produce images in a broader range of styles.

Out of caution, the DeepMind team used red teaming and thorough data labeling techniques to ensure Imagen 3 meets the company’s:

- Fairness

- Bias

- Safety standards

Type: Image

Price:

A free plan is available, but those who want to generate images of people need to sign up for the Gemini Advanced plan, which is $19.99 monthly.

Use cases:

- Producing graphics for presentation slides

- Designing personalized visuals for birthday cards

- Creating artwork for comics and graphic novels

38. Runway

Runway creates AI-generated images, animations, and 3D models using relative motion analysis to generate realistic motion graphics. Its underlying model, trained on images and videos, powers its text-to-video and image-to-video capabilities, offering precise control over style, structure, and camera movement.

Used in movies like Everything Everywhere All At Once, as well as music videos for artists like A$AP Rocky and Kanye West, Runway is designed for professionals in:

- Filmmaking

- Post-production

- Advertising

- Editing

- Visual effects

Type:

- Image

- Audio

- Video

Price:

A free plan, with paid plans starting at $12 per monthly user, is available

Use cases:

- Creating a music video

- Producing a short film

- Adding visual effects to a video

39. Consensus

Consensus is an AI search engine focused on academic research. Students and researchers can access a database of more than 200 million academic papers and refine their searches with prompt instructions, filters, and quality indicators that signal the authority of each source.

Researchers and clinicians can narrow their searches even further based on factors like:

- Methodology

- Study design

- Sample size

Type: Text

Price:

A free plan is available, with paid plans starting at $8.99 per month when billed annually.

Use cases:

- Finding credible sources for a research project

- Investigating how two topics relate to each other

- Searching for answers to medical-related questions

Related Reading

- Gen AI Architecture

- Generative AI Implementation

- Generative AI Challenges

- Generative AI Providers

- How to Train a Generative AI Model

- Generative AI Infrastructure

- AI Middleware

- Top AI Cloud Business Management Platform Tools

How To Choose the Right Enterprise Generative AI Platform

1. Breadth of Use Cases

When selecting an enterprise-ready generative AI platform, the breadth of use cases is a critical first consideration. An enterprise-ready generative AI platform must handle many use cases across business functions and industries. Platforms offering pre-built solutions, industry-specific models, and customizable workflows can help tackle all sorts of challenges, such as:

Customer Support Automation

Generative AI can power intelligent virtual assistants and chatbots that understand customer queries, provide accurate responses, and resolve issues efficiently, reducing response times and improving customer satisfaction.

Employee Experience Automation

A universal virtual assistant that can solve many self-service automation, answering from enterprise-wide knowledge bases to find the correct information at the right time. Its use cases including:

- IT service requests

- HR and recruitment automation

- Knowledge management capabilities

Enhancing Agent Experience

Enhance agent productivity to fulfill customer requests by automatically integrating and interacting with enterprise APIs in real-time:

- Coaching

- Playbook adherence

- Agent performance monitoring

- Helping agents

App Co-pilot Experience

Provide the ability to be a co-pilot for enterprise applications.

Business Process Automation

Generative AI can automate repetitive and time-consuming tasks, freeing up human resources for higher-value activities, such as:

- Data analysis

- Data entry

- Document generation

- Workflow management

Content Generation

From product descriptions and marketing copy to campaigns and community posts, generative AI can streamline content creation processes, ensuring:

- Consistency

- Quality

- Scalability

Insights and Intelligence Decision Systems

By analyzing vast amounts of structured and unstructured data, generative AI can extract useful key information and generate insights to support data-driven decision-making.

2. Ease of Use and No-Code Capabilities

To democratize AI adoption, look for platforms with user-friendly interfaces and no-code tools like drag-and-drop model builders and visual workflow designers. This allows non-technical users to create and deploy generative AI applications, accelerating time-to-value and fostering collaboration between IT and business teams.

3. Advanced Language Model Orchestration

Advanced Language Model Orchestration is a critical capability for enterprise conversational AI platforms to maximize performance, cost, and scalability across diverse use cases and requirements. A well-designed conversational AI + generative AI platform should employ orchestration techniques to intelligently route requests to the most suitable language model based on:

- Task complexity

- Language

- Domain

- Resource availability

A robust platform ensures that the most appropriate model handles each user query by leveraging a combination of intent-based natural language understanding (NLU) and large language models (LLMs), maximizing accuracy and efficiency. This approach enables enterprises to leverage the strengths of different models, such as using intent-based NLU for structured and deterministic interactions while harnessing the power of LLMs for more open-ended and contextual conversations.

The Importance of Model Orchestration in Tailoring Generative AI Solutions for Enterprises

Moreover, advanced model orchestration capabilities allow for fine-tuning models to specific industry domains and use cases, further enhancing performance and relevance. This is particularly valuable for enterprises with particular compliance, security, and business logic requirements, as it ensures that the generative AI solution is tailored to their unique needs.

In contrast, platforms that offer robust language understanding and generation capabilities through state-of-the-art models but need granular control and orchestration may lead to challenges in adhering to business compliance needs, suboptimal resource utilization, and higher costs. By prioritizing advanced language model orchestration, generative AI platforms enable enterprises to:

- Balance performance

- Cost

- Scalability

4. Knowledge Integration and Retrieval Augmented Generation (RAG)

Knowledge Integration and Retrieval Augmented Generation (RAG) capabilities can enhance the accuracy and relevance of generative AI outputs by integrating enterprise knowledge sources. Look for a platform that offers advanced RAG features, including:

Vector Search

Ability to understand the intent behind user queries and retrieve the most relevant information from enterprise knowledge bases with the help of semantic embeddings and vector search.

Data Ingestion Pipelines

Automated processes from various sources into a unified text vector index knowledge repository for:

- Extracting

- Transforming

- Loading data

Context and Metadata Enrichment

Enhancing retrieved information with additional context, such as generative topics, categories, and relationships, improves understanding and retrieval quality. The success of a RAG solution depends on the quality of context available in the documents indexed. As enterprise documents are not necessarily created keeping the context in mind, enriching the context of the extracted documents becomes very important.

Customizable Retrieval Algorithms

Flexibility to fine-tune retrieval algorithms based on enterprise-specific requirements, such as:

- Domain-specific terminology

- Metadata filters

- Business rules

- Access controls

Options to choose various strategies suitable for a collection of a type of document, as well as support combining these retrieval strategies (fusion of strategy).

Tools for Explainable AI

The platform should explain how the chunks at every stage of the pipeline help users understand and improve the quality of answer generation. These are:

- Extracted

- Indexed

- Retrieved

- Ranked

Ability to Monitor and Continuously Evaluate

The platform should offer capabilities to monitor and continuously evaluate retrieval performance and generate AI answer generation to alert administrators to incidents and allow administrators to take action swiftly for:

- Quality

- Fairness

- Completeness

Enterprise Readiness:

The platform should provide a wide range of:

- Connector capabilities

- Support for role-based access controls

- Inclusion/exclusion rules

- Sensitive information redaction

- The ability to monitor using insights

5. Hybrid Approach: Combining Traditional Conversational AI and Generative AI

Enterprises with existing investments in traditional conversational AI can benefit from platforms that seamlessly combine the strengths of both approaches, enabling more natural and contextual conversations. Supporting conversational AI seamlessly on multiple channels, including voice and text channels, could be very complex.

The platform should be able to handle conversational AI nuances such as complex contextual conversations and digressions. Similarly, it should support complex Voice AI nuances like integrations into advanced speech-to-text and text-to-speech engines, the ability to customize speech adaptation, and the ability to support human-like synthetic voice generation and interactive experiences nuances like repeat holds, etc.

6. Explainable AI and Model Governance

Transparency and accountability are crucial factors when implementing generative AI in enterprise settings. Opt for a platform that prioritizes explainable AI, providing insights into how models arrive at their outputs. This helps build trust among:

- Stakeholders

- Ensures compliance with regulatory requirements

- Enables informed decision-making.

Look for robust model governance features to maintain control over AI deployments and mitigate risks associated with model drift and biases, such as:

- Version control

- Performance monitoring

- Auditing

The platform should provide advanced model evaluation tools for subjective evaluation of the model and prompt performance across applications, the ability to see the review against parameters such as:

- Toxicity

- Bias

- Completeness

- Cohesiveness

- Factual correctness

7. Vertical and Domain-Specific Prebuilt Solutions

To accelerate enterprise adoption of generative AI, it is crucial for platforms to offer vertical and domain-specific prebuilt solutions. These solutions should include pre-trained models, workflows, and integrations tailored to specific industries and business functions, such as:

- Healthcare

- Finance

- Retail

- Customer service

By providing out-of-the-box functionality and industry-specific knowledge, prebuilt solutions can significantly reduce the time and effort required for enterprises to implement and derive value from generative AI. Moreover, these solutions should be built on a flexible and extensible platform, allowing organizations to customize and enhance them to meet their unique requirements. An enterprise generative AI platform that supports a wide range of prebuilt solutions across various verticals and domains, along with the ability to easily adapt and extend them, can greatly accelerate the adoption and success of generative AI in enterprise settings.

8. Scalability and Performance

Enterprise generative AI platforms must handle large-scale deployments, processing vast data and serving multiple concurrent users. Look for a platform with a scalable architecture that leverages technologies like event-based architectures and stateless horizontal scaling to adjust resources based on demand dynamically. It should also provide advanced performance optimization techniques to reduce latency and improve responsiveness, such as:

- Model compression

- Quantization

- Caching

The platform should offer flexibility in deployment options, supporting both cloud and on-premises environments to accommodate enterprise security and compliance requirements. This way, enterprises can choose the deployment model that best suits their needs and ensures they remain in control of their data and infrastructure costs.

9. Security and Compliance

Generative AI often involves processing sensitive enterprise data, so security and compliance are critical. Choose a platform that adheres to industry-standard security practices, such as:

- Sensitive data redaction

- Data encryption

- Access controls

- Data segmentation.

It should also comply with relevant regulations like:

- PCI

- GDPR

- HIPAA

- SOC 2

Look for these features to ensure the confidentiality and integrity of your enterprise information, such as:

- Data lineage

- Audit logs

- Secure data controls

10. Generative AI Tooling

Fine-tuned models are crucial for widespread AI adoption in enterprise applications. They enable models to adapt to enterprise data and business rules. A robust enterprise generative AI platform must provide capabilities to fine-tune various models, including commercial and community models of multiple sizes, and continuously support new industry models. Fine-tuning tools should support techniques lik:

- LoRA

- QLoRA, etc.

Effective Model Development and Deployment

They should be user-friendly enough for subject matter experts to quickly bring in enterprise data, clean it, and use it for fine-tuning. Model evaluation tools should enable collaborative team-level fine-tuning, provide necessary tooling for reinforcement learning with human feedback, and offer easy-to-use features for various collaborators and tasks.

Technical Requirements for Model Deployment

Deploying these models should be seamless in cloud or on-premises environments. The platform should provide sophisticated operational capabilities to automatically provision the right size GPU/TPU machine for each model:

- Ensure redundancy

- Enable automatic API endpoint deployments with endpoint protection

- Scale horizontally with load while provisioning only the required hardware

After all, hosting large language models can be a significant infrastructure cost for enterprises. The platform should handle all these model operations requiring extensive engineering expertise.

11. Empowering Enterprises to Build and Customize AI Models

To build enterprise-grade generative AI applications, you need a comprehensive set of tools and frameworks for data preparation, model training, testing, and deployment. An enterprise generative AI platform must provide intuitive and powerful tooling that enables developers and data experts to:

- Efficiently build

- Customize

- Deploy AI models

Model Fine-Tuning for Effective Generative AI

Prompt engineering is a critical aspect of this tooling. It allows teams to design, test, and refine prompts collaboratively to ensure high-quality, relevant, and accurate responses. The platform should also provide tools for fine-tuning pre-trained Large Language Models to create highly specialized and effective generative AI solutions. Equally important are tools for evaluating model outputs, both objectively and subjectively, at scale.

Evaluating and Optimizing Model Outputs

Objective evaluation tools should provide metrics and insights on accuracy, fluency, diversity, and coherence. In contrast, subjective evaluation tools should enable human reviewers to assess model outputs’ quality, relevance, and appropriateness across applications. To facilitate continuous model and prompt optimization, the platform should offer tools that allow teams to collaboratively:

- Analyze evaluation results

- Identify areas for improvement

- Efficiently iterate on model:

- Architectures

- Training data

- Prompts

- Fine-tuning strategies.

Advanced analytics and reporting capabilities should surface actionable insights from model performance data, user interactions, and business metrics. This way, enterprises can continuously adapt and improve their generative AI models to meet their evolving needs.

12. Enterprise-Grade Support and Expertise

Choose a platform provider with a proven track record to ensure smooth implementation and ongoing success of:

- Successful enterprise deployments

- Domain expertise across industries

- Comprehensive support services

Start Building GenAI Apps for Free Today with Our Managed Generative AI Tech Stack

Lamatic offers a comprehensive Generative AI tech stack that empowers teams to rapidly implement GenAI solutions without accruing tech debt. Our platform provides:

- Managed GenAI Middleware

- Custom GenAI API (GraphQL)

- Low Code Agent Builder

- Automated GenAI Workflow (CI/CD)

- GenOps (DevOps for GenAI)

- Edge deployment via Cloudflare workers

- Integrated Vector Database (Weaviate)

Lamatic empowers teams to rapidly implement GenAI solutions without accruing tech debt. Our platform automates workflows and ensures production-grade deployment on the edge, enabling fast, efficient GenAI integration for products needing swift AI capabilities.

Start building GenAI apps for free today with our generative AI tech stack.

Benefits of Lamatic’s Generative AI Stack

Lamatic’s Generative AI Stack helps teams accelerate the implementation of AI features:

- Its low-code interface simplifies development by allowing users to build applications with minimal coding.

- The stack also automates processes like testing and deployment so teams can focus on building innovative applications instead of getting bogged down in operational details.

- Lamatic’s solution eliminates tech debt by providing a production-ready framework that ensures GenAI applications are reliable and scalable.