In this edition

The Need for Speed

😆 Your GenAI application is laughable! At least, it will be if you don’t evolve it fast enough.

Last month we described how the traditional DevOps framework has to be adjusted to work well for LLM-connected applications (see DevOps for GenAI). Today we explore the role application design plays in deployment velocity.

Modularity drives deployment velocity

Modular design is not new. Its many benefits were well established by Amazon and others that pivoted to service-oriented architectures in the early 2000s. GenAI, however, is new, and it takes the need for modularity to a whole new level.

Isn’t this old news?

Modular design is easy once the pieces exist and you know how they fit together. Just as LEGO bricks let us create complex structures by snapping together simple, interchangeable parts, a modular approach to GenAI lets us build sophisticated systems from simple components.

LLMs are obviously modular

It’s easy to see why foundation models should be treated as interchangeable modules. The steady drumbeat of new model releases forces product teams to constantly re-evaluate the relative quality, speed and cost of different models. The need for failover options also demands that LLMs be “hot-swappable” in production GenAI systems.
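To make “hot-swappable” concrete, here is a minimal sketch of failover across interchangeable models. It assumes every model can be wrapped as a plain function that takes a prompt and returns text. The names `complete_with_failover`, `primary_model` and `backup_model` are hypothetical stand-ins for illustration, not any vendor’s or Lamatic.ai’s actual API.

```python
from typing import Callable, Sequence

# Assumption: a "model" is any callable that maps a prompt to a completion.
# Real vendor SDK calls would be wrapped to fit this shape.
Model = Callable[[str], str]


class AllModelsFailed(RuntimeError):
    """Raised when every configured model fails for a prompt."""


def complete_with_failover(
    prompt: str, models: Sequence[tuple[str, Model]]
) -> tuple[str, str]:
    """Try each (name, model) pair in order.

    Returns (name, completion) from the first model that succeeds.
    """
    errors: list[str] = []
    for name, model in models:
        try:
            return name, model(prompt)
        except Exception as exc:  # a real system would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise AllModelsFailed("; ".join(errors))


# Hypothetical stand-ins for vendor-backed models.
def primary_model(prompt: str) -> str:
    raise TimeoutError("primary model timed out")


def backup_model(prompt: str) -> str:
    return f"[backup] {prompt}"


if __name__ == "__main__":
    name, text = complete_with_failover(
        "Summarize this ticket.",
        [("primary", primary_model), ("backup", backup_model)],
    )
    print(name, text)
```

Because the calling code only depends on the ordered list of models, swapping in a newly released model or reordering the fallbacks is a configuration change rather than a code change.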

❝

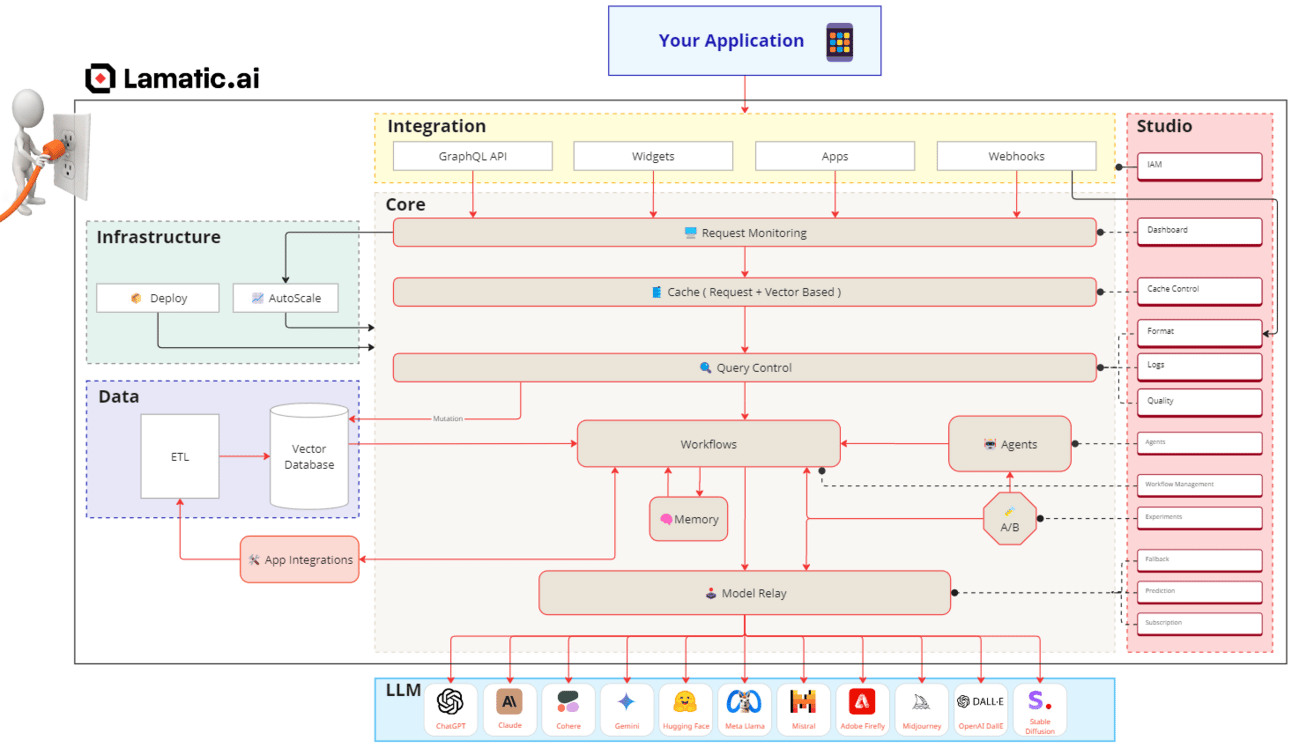

Lamatic.ai offers hot-swappable integrations with more than 40 models from leading vendors, as well as the ability to host and integrate private models.

AI workflows should be modular

GenAI builders quickly realize there are many ways to generate a given output, and new research constantly suggests alternative paths to evaluate. Effective evaluation requires that these workflows be easy to modify and test against one another. Modularity makes iterating on and testing alternative workflows easier and faster.
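As a rough illustration, a workflow can be modeled as an ordered list of small steps, so that a variant is just a different list. The sketch below assumes each step is a text-in, text-out function. The step names and `fake_llm` are hypothetical placeholders, not a real model call.

```python
from typing import Callable

# Assumption: each workflow step takes text and returns text.
Step = Callable[[str], str]


def run_workflow(steps: list[Step], text: str) -> str:
    """Feed the text through each step in order."""
    for step in steps:
        text = step(text)
    return text


# Hypothetical steps, for illustration only.
def normalize_whitespace(text: str) -> str:
    return " ".join(text.split())


def truncate(text: str) -> str:
    return text[:40]


def add_instructions(text: str) -> str:
    return f"Answer concisely: {text}"


def fake_llm(text: str) -> str:
    # Placeholder for a real model call.
    return f"<response to: {text}>"


# Two variants that differ by a single step.
WORKFLOWS: dict[str, list[Step]] = {
    "baseline": [normalize_whitespace, add_instructions, fake_llm],
    "truncated": [normalize_whitespace, truncate, add_instructions, fake_llm],
}


def compare(workflows: dict[str, list[Step]], text: str) -> dict[str, str]:
    """Run every workflow variant on the same input."""
    return {name: run_workflow(steps, text) for name, steps in workflows.items()}


if __name__ == "__main__":
    for name, output in compare(WORKFLOWS, "  What is   modular design? ").items():
        print(name, "->", output)
```

Adding a third variant means adding one more entry to `WORKFLOWS`, and the same comparison runs unchanged.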

Managed integrations offer flexibility & functionality

This one is less obvious, but integrations with best-of-breed subsystems offer a valuable form of modularity - especially given the speed of innovation across the GenAI ecosystem. These managed integrations make it possible to quickly add new capabilities to your GenAI tech stack. The best-of-breed approach also reduces the risk of being stuck with inferior tools.

❝

Lamatic.ai’s drag-and-drop integrations with leading data sources, AI tools and front-end applications make it easy to add new capabilities.

Abstraction Layer

History shows us that new technologies often give rise to middleware that itself creates modularity. For instance, the advent of the internet gave rise to Content Delivery Networks (CDNs). While CDNs provide sophisticated, state-of-the-art capabilities, they also offer simplifying modularity to the companies that use them. In the same vein, Lamatic.ai delivers powerful capabilities while reducing both complexity and the amount of custom code companies must build and maintain.

❝

Helpful Analogy: CDNs like Cloudflare & Akamai add value to HTTP requests in ways that are similar to how Lamatic adds value to LLM requests (acceleration, quality, scalability, security, etc.).
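To show the general idea behind the analogy, here is a small sketch of middleware layered around an LLM call, much as a CDN sits in front of an HTTP origin. It is not a description of how Lamatic works. The wrappers `with_cache` and `with_redaction` are hypothetical, and the model is assumed to be a plain prompt-in, text-out function.

```python
from typing import Callable

# Assumption: a "model" is any callable that maps a prompt to a completion.
Model = Callable[[str], str]


def with_cache(model: Model) -> Model:
    """Acceleration: serve repeated prompts from memory."""
    cache: dict[str, str] = {}

    def cached(prompt: str) -> str:
        if prompt not in cache:
            cache[prompt] = model(prompt)
        return cache[prompt]

    return cached


def with_redaction(model: Model, secrets: list[str]) -> Model:
    """Security: mask known sensitive strings before they reach the model."""

    def redacted(prompt: str) -> str:
        for secret in secrets:
            prompt = prompt.replace(secret, "[REDACTED]")
        return model(prompt)

    return redacted


def echo_model(prompt: str) -> str:
    # Hypothetical placeholder for a real model call.
    return prompt.upper()


if __name__ == "__main__":
    # Layers compose without the application or the model knowing about them.
    client = with_cache(with_redaction(echo_model, ["hunter2"]))
    print(client("The password is hunter2"))
```

The application calls `client` exactly as it would call the bare model, which is what lets capabilities be added or removed without touching application code.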

Will It Gen?

As builders, we know it takes both imagination and know-how to create value. To spark this imagination & share this community’s collective know-how, we’re introducing a newsletter section called “Will It Gen?”. This section will drill down on interesting applications of GenAI - detailing the goal(s), exploring alternative approaches and examining the actual results.

We’d love to feature GenAI applications you’ve either built or are thinking about building. To suggest an application you’d like us to explore in an upcoming edition of “Will It Gen?”, click here.

Other Feedback:

We produce this newsletter for you! Please share your thoughts on what you’d like to see more (or less) of. Just join our Slack community (click below) or respond to this email with your feedback.